“Test-Driven Democracy” — A DevOps Analysis of the Iowa Democratic Caucus

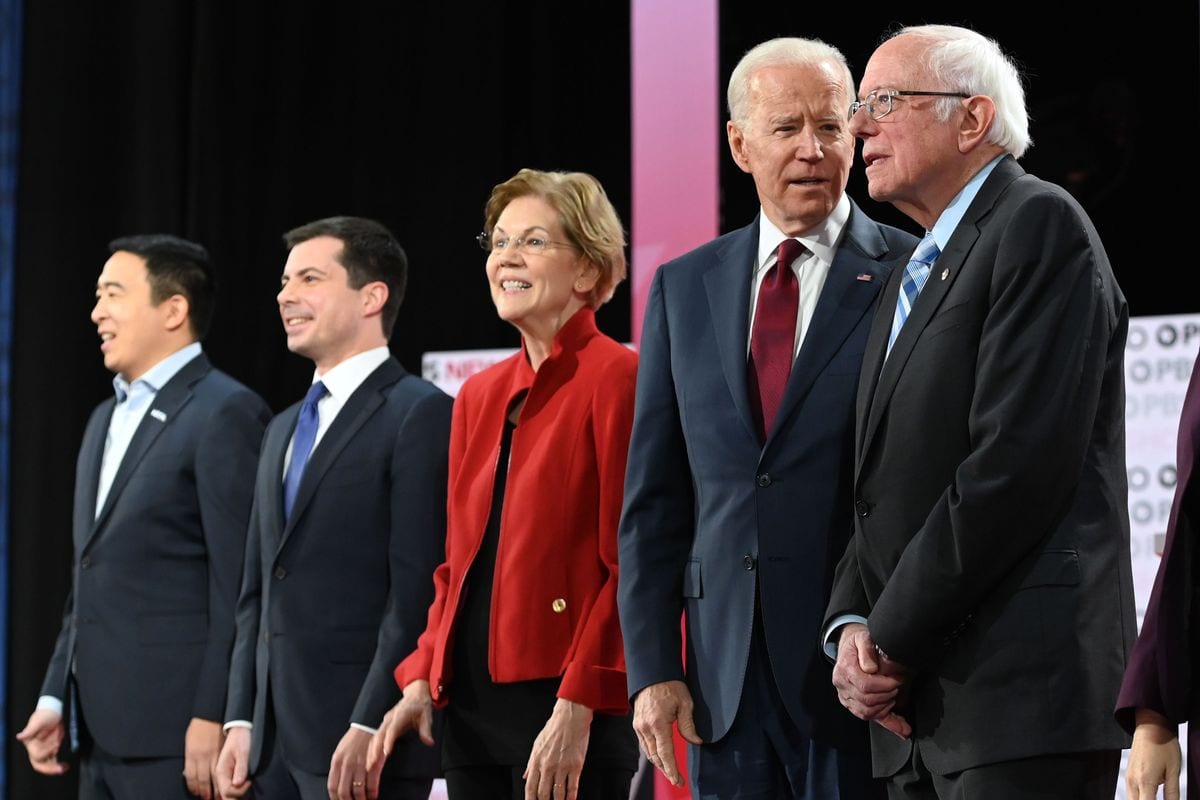

Unless you’ve been living under a rock news-wise in the United States, this has been a very hefty week in American politics, topped off with an impeachment vote that may, or may not, determine the future of the country in the modern era. However, what I’m going to talk about is possibly a little less “wonk-ish”: the Democratic Party’s Iowa caucus on February 3, 2020, whose results were delayed by technical issues that, even two days post-caucus, haven’t been fully disclosed.

Prior to Iowa’s public meltdown, I had moderated a panel on election security at the ShmooCon security conference in Washington, DC, where we highlighted several threats (and their mitigations) to the upcoming election cycle. We noted that the typical focus of press and candidates has been on the well-documented ability to essentially “hack” various physical voting machines by multiple means, and indicated that, given the complexity and sophistication required, doing this at scale is highly improbable, even though the vulnerabilities exist. However, we did conclude that one of the greatest risks, the further erosion of the American public’s trust in the electoral system and our democracy, is driven more by messaging. So, what do I mean by that?

Nearly every news story has been conditioned by what most likely occurred in the 2016 election, and continues to this day, to assume that the process was affected by external factors. Those issues ranged from potential tampering in systems supporting the election (notably voter registration systems used by states, electronic poll books, and other sustaining infrastructure) to sustained disinformation and media manipulation strategies by the same adversaries. This was well documented and investigated by our governmental security and law enforcement apparatus. Several reports were issued, but they didn’t actually address those incidents as points in time. Rather, the reports focused on the next election cycles, including the midterms and this year’s presidential election.

This article is not focused on that particularly, but rather on how we, the public, media, and leaders, speak about events that affect the processes supporting these election cycles. As previously mentioned, we just completed a caucus that was plagued by its reliance on a new capability that, by all accounts, did not undergo the rigor typically associated with a high-visibility and high-impact technology solution. This is what I intend to focus on, and I’ll address ways that this could have gone better, and how we need to speak about such issues in the public space in the future, especially when the stakes are so high.

The impetus for this post was me literally yelling at my radio during an interview I was listening to on National Public Radio, which had Betsy Cooper from The Aspen Institute as a guest. The selection of the speaker’s angle within the discussion was nearing as irresponsible as you could get in order to satiate the news cycle. As mentioned, those of us on the panel at ShmooCon have concerns and issues with security of our election apparatus, but the immediate ease of finger pointing and saying “it must be a hack” is lazy.

Software development isn’t easy. Anything more complex than a “Hello World” program typically requires a level of rigor, discipline, and skill to produce something that is usable, both from the standpoint of the person expected to interact with it and from that of the systems supporting its operation. How do I know this? Because prior to my current role, I led teams delivering solutions in the public and private sector for over twenty years, including planning, architecting and securing them from abuse and misuse.

In Iowa, the desired need was to produce a system to collect, record and report results for the Iowa Democratic Party caucus. Sounds simple, and at its foundation, the system is merely tabulating a count of results in a verifiable and trustworthy manner. As some have pointed out, this is what you would do with a spreadsheet program, and they’re not too far off. But, the process is a bit more complex, and more drawn out than simply expecting people in the various counties to open Microsoft Excel or Google Sheets and count how many people raised their hands when asked who they prefer to run for the nomination to be President.

First off, we’ve already defined the space we are attempting to operate in. We’re attempting to build a system for the citizens of Iowa to tabulate a preference, in an easy and verifiable manner, which is tamper resistant, and can be reported to an aggregator, notably the Democratic Party in Iowa. The same process would exist in similar form for the Republican Party if the primary candidate were not running unopposed. If you need greater detail on the caucus process, please read the Wikipedia page on it, which sums it up more thoroughly than I will here. This provides us with what we call, in Agile software development, a “user story”, and in this case several, as the users here are the caucus tabulators (those who are doing the counting in the 1,681 precincts), the party aggregators who receive the tabulations from each precinct, and the media, who will receive the final counts after the tabulations.

Sounds pretty simple — and while my second computer science class in college asked us to do 3D shading for wireframe models, I’m sure having undergraduates crank out a spreadsheet program as part of a project there is not out of the question. This is obviously a sarcastic and simplistic view of the problem, because it also misses the other aspect of the data collection, which is collecting the results, verifiably, through multiple rounds (the “alignments”), and then securely sharing those counts and tabulations with the central party for dissemination to media outlets.

Now in aggregate, as a sum total, a few small counting errors may not significantly shift the outcome, but as the fidelity of the count decreases the impact is greater. Let me explain.

For those 1,681 precincts, the caucus system allows for you, your neighbors, and their neighbors to come together and persuade each other that one candidate is the better choice over another. For example, say 500 people show up at each precinct. Extrapolate that out among the precincts and you have a total caucus population of about 840,500, which is a little more than 25% of the state population, accounting for party alignment, willingness to participate in the caucus, voting eligibility, etc. Each precinct goes through several alignments, that is, rounds of voting, electioneering, and eventual tabulation. Right there is the initial process to model, or the workflow of the system. The electioneering, or convincing of others about the positive qualities of a candidate, is subject to less scrutiny because it is done openly, by design. The tabulation is the critical piece, because those numbers determine how many delegates are awarded to each candidate for the party nominating convention, which is why there’s importance placed on getting this right.

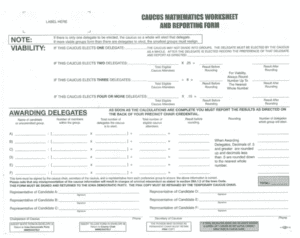

That 840,500 population gets less “effective” due to how each precinct is tabulated, as it’s not the votes that are counted, but the percentages of the alignments of those votes, such as 30% to candidate A, 25% to candidate B, 20% to candidate C, and so on. Again, this is simplistic given the formula, but the delegates awarded are essentially results of those percentages. So the 500 precinct voters are reduced to a breakdown of how many delegates the precinct is awarded, say, 20 (even though the actual number is more than half that, but the example is still valid). So if somebody won 30% of the precinct, they would be awarded 6 delegates, even though that equated to 150 of the 500 people voting for them. That is quite the reduction, and spread out among all precincts, those small percentage differences and awarded delegates have a greater impact due to the fidelity loss. Even worse, due to the weighting formulas, there are rounding issues that come into play, namely to address a concept titled “viability” here. See the following caucus sheet used as an example. Not so simple or equitable when you look at it.

(source: https://www.npr.org/2016/01/30/464960979/how-do-the-iowa-caucuses-work)
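The delegate arithmetic in the example above can be sketched in a few lines of Python. This is a simplified illustration under stated assumptions, not the party's actual formula: the function name `award_delegates`, the 15% viability cutoff, and the round-half-up rule are all assumptions made for the example, and real caucus math also covers realignment of non-viable groups and tie-breaking.

```python
from decimal import Decimal, ROUND_HALF_UP

# Hypothetical statewide turnout from the example: 1,681 precincts x 500 people.
total_attendance = 1681 * 500  # 840,500


def award_delegates(counts, precinct_delegates, viability=Decimal("0.15")):
    """Simplified sketch: convert one precinct's head counts into delegates.

    Assumptions (not confirmed by the article): groups below the viability
    share earn nothing, and fractional delegates are rounded half up.
    """
    total = sum(counts.values())
    awarded = {}
    for name, supporters in counts.items():
        share = Decimal(supporters) / Decimal(total)
        if share < viability:
            continue  # non-viable group: no delegates in this sketch
        raw = Decimal(supporters) * precinct_delegates / Decimal(total)
        awarded[name] = int(raw.quantize(Decimal("1"), rounding=ROUND_HALF_UP))
    return awarded


# 500 attendees, 20 delegates, as in the example above.
counts = {"A": 150, "B": 125, "C": 100, "D": 75, "E": 50}
result = award_delegates(counts, 20)
print(result)                # {'A': 6, 'B': 5, 'C': 4, 'D': 3}
print(sum(result.values()))  # 18
```

Candidate A's 150 supporters collapse into 6 delegates, and the 50 people behind candidate E leave no trace in the output at all. In this sketch only 18 of the 20 delegates are assigned, which is exactly the kind of gap the viability and rounding rules on the paper worksheet exist to resolve.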

However strange, this sheet is not mirrored in the application used by the Iowa Democratic Party during this caucus, which, from screenshots, appears to be merely collecting these paper tabulations and reporting them. Now, skeuomorphism, or the near exact modeling of real-world physical interfaces within a computing or virtual environment, is definitely not a goal or intent in such cases. Still, the interaction of entering, calculating and reporting such information, and the workflow as it were, needs to be similar enough, given the expected end-user population and its familiarity and comfort with working with the computing system. When Apple decided to roll with skeuomorphic interface elements, such as graphical wood textures and paper tablet elements for some of its operating system components, it was handily derided by developers, designers, press, and users. The key element of such a user interface translation in this case is not that this was paper tabulation, but that there were fields into which the data could be entered, and that the application produced output that mirrored the results of a more “tactile” (read: pen and paper) calculation.

So we’ve covered the challenge of user stories and requirements gathering, user interfaces and experience, and some basic workflow modeling. You do know what this begins to sound like? A DevOps model for application and service design. To complete this, and to make it into DevSecOps (because this is democracy), let’s look at security, deployment and operations, and where most of what happened in Iowa failed, but not in the expected way.

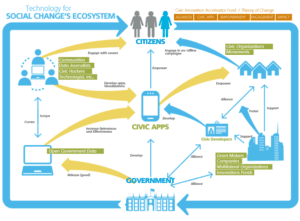

Let’s touch on one piece of this puzzle, which has gotten more than a few column inches in the past few days, but where the mark has been clearly missed. The concept of “civic tech” can encompass many things, and usually involves independent or politically (and/or issue) aligned organizations providing technology and subject matter expertise. Sometimes it may be dealing with data collection and analysis, or outreach and engagement, and other times it’s architecting, developing and operating infrastructure to support those core activities.

Most civic tech companies are small startups, usually existing on initial investments until a major client is scored or they are absorbed by a larger organization. They are often run by folks who have a campaign affiliation, and use that to capitalize on both funding and reach into campaigns or initiatives. While the motives, externally, seem very altruistic, there’s very little that separates them from the motivations of your Silicon Valley start-up of the past 30 years. This also seemed to be the case for the company tasked with developing the app for the Iowa caucus at the behest of the Iowa Democratic Party.

(source: https://medium.com/intelligent-cities/local-government-and-the-civic-tech-movement-513f53ba26b1)

Now I’m not going to chase down the ownership, operations, management, and investment of this company and associated entities. The key takeaway is that while it plays in this space, much like a new startup, it hasn’t had that “big hit” or “killer app”, and this was its attempt to grab the brass ring.

Consider the story of Icarus, who flew too close to the Sun and fell to his death. The lesson here lies in a little bit of hubris and overextension of capabilities. A core tenet of leadership and management is to manage expectations and not over-promise and under-deliver, especially when the outcome is high visibility and provides a critical capability.

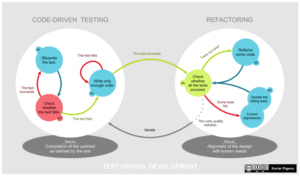

Agile development frameworks, when adapted to project management, are far better at breaking down delivery cycles and providing bite-sized achievements and measurable goal progression than the big bang method that predated the rapid application development (RAD), extreme programming (XP), and lean software development practices. Much like those who miss the core concepts of Icarus’ myth, some also reduce the “fail fast, fail forward” premise of RAD to just “fail fast”, with little time left to learn and integrate as part of the iterative design and development process. This is similar to what happens in the operations and security world when a team merely reacts to and cleans up an incident, but does not complete a root-cause analysis and bring those results into eventual tabletop exercises to test whether, when encountering the scenario again, it can handle it in a more prescribed and efficient manner, or even go as far as to mitigate or eliminate the chance of it ever occurring again. This is the main part of test-driven development, and where the title of this article derives its meaning from.

(source: https://en.wikipedia.org/wiki/Test-driven_development#/media/File:TDD_Global_Lifecycle.png)

Test-driven development is far from new. In fact, it evolved out of the culture of places such as NASA, rather than just out of the extreme programming concepts of the late 1990s. It’s more of a quality control practice, but when combined with other concepts and methodologies, and applied to software and systems development, it keeps down feature creep and refines deliverables for the release gates of projects.

However, because the concept of DevOps, with its roping in of operations and security, is still relatively nascent, test-driven development has stayed primarily in development lingo rather than extending into deployment, as it does in DevOps and DevSecOps practices. Initial comments and complaints by those who were asked to use the caucus application were that it arrived not via the normal platform app stores from Apple and Google, but via the test platforms TestFlight and TestFairy, usually reserved for alpha and beta testing. Now, this isn’t actually indicative of anything quality-wise on its face, but it does point to a rushed deployment-to-production process. As many technologists know, this is the smoke from a larger fire. These tools also work via a process of sideloading these applications on destination platforms, which essentially bypasses many of the automated readiness and, in many cases, security checks usually reserved for final, production-quality applications. When production deployments to customers and users occur in such a way, you are DevOps and Agile in name only, and have eschewed what is generally positive about these techniques and frameworks.

Since we touched on the subject earlier, and as this article was born out of a security discussion, let’s cover that topic now. There are plenty of articles that focus on fears about the security of the process and whether or not this was the result of an attack or other malfeasance, but Motherboard has a pretty good wrap-up on where the risks actually lie with the process the developers adopted, including not selecting the enterprise-level testing tier, which left them without single sign-on (SSO) to verify and authenticate users of the app and service. But why is this seemingly very “techie” feature so important, and why might its absence add some credence to the suspicions of malfeasance?

There’s a concept titled “zero-trust” networking/computing, which was essentially coined by the analyst firm Forrester and taken to the extreme via the BeyondCorp model by Google. That model changes the traditional networked computing model, where there’s an intrinsic trust on the network, to one where no one and nothing on the network is trusted. With the advent of mobile devices and cloud computing, organizations are regularly accessing services and other capabilities they have no direct management or control over, including the transport networks and servers. These systems operate in a trustless or zero-trust environment, where the only trust that is and can be established is through strong, reliable and resilient methods of identity and access management. This typically requires some form of identity proofing based on what you wish to have access to and where, as well as the sensitivity (including the required confidentiality, integrity and availability) of the data and systems. Whenever you are asked to sign up for a new service, typically your identity proofing only goes as far as putting in an email address, but as the required access control and security levels rise (based on what the system owner decides), higher levels of assurance are usually required. This can range from providing multiple proofs of identity, such as names, birthdates, addresses, and even photos that match known prior proofs, to requiring an independent investigation to prove that the user requesting access is who they say they are, and is trustworthy.

Now, for such a process as a caucus, and the non-personally identifiable information being exchanged, the assurance level required to identify end users is probably not high, but it would still require some form of registration and identification to ensure the person submitting the data is the one authorized to do so. By using SSO, such as allowing you to log in via Facebook or Google, and offloading the proofing to another semi-trusted entity, you are assuming that person is who they say they are. It appears that, as a hedge against a potential glitch, the precinct PIN, or personal identification number, was printed on precinct worksheets and, by assumption, would act as another factor in identification, either during phone reporting or during the login process via the application.

Without a copy of the application at this point, one can only guess that this is the most logical way to quickly and cheaply provide this type of capability via either method. But as noted in the Motherboard article, because the free tier of the Android testing platform was selected, this capability was not available, either to authorize downloading and distribution of the application or for the users within the application. To add insult to injury, several Twitter users were retweeting photos of precinct tabulation sheets with the PIN codes unprotected and unobfuscated. In short, even if SSO had been enabled, the traditional methods also contained a flaw, at least as they were used by those who were supposedly authorized.
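To make the PIN discussion concrete, here is a minimal sketch of how a reporting service could check a precinct PIN as a second factor. Nothing here reflects how the caucus app actually worked: the function names, the sample PIN, the salt size, and the iteration count are all hypothetical choices for illustration.

```python
import hashlib
import hmac
import os


def hash_pin(pin: str, salt: bytes) -> bytes:
    """Derive a salted hash so the raw PIN never needs to be stored."""
    # The iteration count is an illustrative assumption, not a recommendation.
    return hashlib.pbkdf2_hmac("sha256", pin.encode("utf-8"), salt, 100_000)


def verify_pin(submitted: str, salt: bytes, stored_hash: bytes) -> bool:
    """Compare in constant time to avoid leaking information through timing."""
    return hmac.compare_digest(hash_pin(submitted, salt), stored_hash)


# Enrollment: the service keeps only the salt and the derived hash.
salt = os.urandom(16)
stored_hash = hash_pin("482913", salt)  # hypothetical precinct PIN

print(verify_pin("482913", salt, stored_hash))  # True
print(verify_pin("000000", salt, stored_hash))  # False
```

Careful server-side handling only goes so far. Once a photo of the worksheet with the PIN printed on it is circulating on Twitter, anyone can submit the correct value, which is why a shared secret on paper is a weak substitute for proper identity proofing.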

When things fail so spectacularly before we even discuss performance and stability issues, you’ll probably guess that there are problems at this stage too. Test-driven development can and should include some regression and performance testing using automated tools, or at least what is termed a test harness, which accounts for everything that should be tested for in a normal, expected operating mode and reveals whether any changes had an adverse effect. This allows for the comparing of results, the identification of potential faults, and confirmation that, given the inputs of the test cases, the processing of the data in the system produces the expected output. Skipping this, or not having it as part of the process, is not only risky, but borders on malpractice as a developer.
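A test harness of the kind described above does not need to be elaborate. The following is a minimal sketch, assuming a hypothetical `tally` function as the code under test and a handful of hand-verified "golden" cases; none of these names come from the actual caucus application.

```python
def tally(precinct_reports):
    """Hypothetical function under test: sum candidate counts across precincts."""
    totals = {}
    for report in precinct_reports:
        for candidate, count in report.items():
            if count < 0:
                raise ValueError("counts cannot be negative")
            totals[candidate] = totals.get(candidate, 0) + count
    return totals


# Golden cases: known inputs paired with outputs that were checked by hand.
GOLDEN_CASES = [
    ("single precinct", [{"A": 10, "B": 5}], {"A": 10, "B": 5}),
    ("two precincts", [{"A": 10}, {"A": 3, "B": 7}], {"A": 13, "B": 7}),
    ("no reports", [], {}),
]


def run_regression(func, cases):
    """Run every golden case and return (name, expected, actual) for failures."""
    failures = []
    for name, given, expected in cases:
        actual = func(given)
        if actual != expected:
            failures.append((name, expected, actual))
    return failures


failures = run_regression(tally, GOLDEN_CASES)
print("regressions:", failures)  # an empty list means behavior is unchanged
```

Re-running the same cases after every change is what turns this from a one-off check into regression testing: if a later edit to `tally` alters any known-good output, the harness names the case that broke.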

I noted earlier the requirement for the system to maintain the confidentiality, integrity, and availability of the data and system, commensurate with how the system is being used and what is being processed. This is a common part of the security assessment and evaluation process that should be performed during the development of the system architecture, but also during testing to measure the resiliency of the final system. News has started to come out, as results are beginning to be verified and reported, that this too is in question: even after their initial release, the numbers are being revised because the app-reported results did not match the paper-reported ones.
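An integrity check between the two reporting channels is simple to express. The sketch below assumes each channel yields a mapping of precinct ID to candidate counts; the `reconcile` function, the precinct IDs, and the numbers are invented for illustration and are not drawn from the actual results.

```python
def reconcile(app_counts, paper_counts):
    """Return every precinct where the app and paper records disagree."""
    mismatches = {}
    for precinct in sorted(set(app_counts) | set(paper_counts)):
        app = app_counts.get(precinct)      # None if the app never reported
        paper = paper_counts.get(precinct)  # None if no worksheet was filed
        if app != paper:
            mismatches[precinct] = {"app": app, "paper": paper}
    return mismatches


# Hypothetical data: one transposed digit and one precinct missing from the app.
app_counts = {
    "P-001": {"A": 30, "B": 20},
    "P-002": {"A": 15, "B": 25},
}
paper_counts = {
    "P-001": {"A": 30, "B": 20},
    "P-002": {"A": 51, "B": 25},
    "P-003": {"A": 12, "B": 18},
}

mismatches = reconcile(app_counts, paper_counts)
for precinct, detail in mismatches.items():
    print(precinct, detail)
```

Running a comparison like this before any numbers are released, with the paper worksheet treated as the record of truth, would surface discrepancies privately instead of through public revisions.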

This compounds the earlier picture of a short-circuited testing cycle, and adds more credence to the idea that end-to-end testing with users was lacking, and that even a proper set of user stories, which are critical in the sprints that deliver a minimum viable product (MVP), was probably not adequately created or managed. If this wasn’t done, odds are that no significant security architecture was developed, other than what could be bolted on as components and libraries or inherited as best practices from consumed services (such as, possibly, Amazon S3), and that no in-depth security testing was completed, given the previously reported development and release timeline. Adding insult to injury, the backup, out-of-band reporting mechanism in place for non-digital reporting failed due to what is termed oversubscription in tech circles, which basically boils down to: the phone number was busy. If a part of your system, even a backup (termed continuity of operations), was not tested, it really wasn’t part of the overall system architecture to begin with, and that, again, fails the concepts of Agile and DevOps.

(source: http://twitter.com)

Now, with all of this considered, can there be lessons learned in order to not have this happen again? Sure. Should we go as far as to say that ACRONYM, the company whose task it was to deliver this system to Iowa Democrats, should not be trusted and should be abandoned as a supplier? No, not really, and here’s why. Most likely, when the services you have become most accustomed to (Facebook, Twitter, Google, Microsoft, Amazon) first took to the Internet to begin operations, there were a lot of failures. They still occur to this day, just with less frequency, because these companies learn and improve from those incidents. I mentioned before that Agile processes in software development gain strength from the iterative nature of the testing and requirements development feedback loop, which makes incremental progress toward a goal shared among developers and clients. You can still run into a Twitter fail whale or the inevitable doggy error page on Amazon, and in a few cases even regular, time-tested updates to infrastructure at Google and Microsoft can cause cascading outages. Have we abandoned them? Probably not: because those failures have been reduced in frequency by adopting and learning from these models, we tolerate them (and those organizations have become more transparent about them). Was it all on ACRONYM to get it right the first time? Again, modern software engineering should not occur in a vacuum, so ask who approved the apps for release to the precinct staff prior to the caucuses. I doubt anybody at the company had that level of authority, and in most cases they probably shouldn’t, and this highlights the last issue that I will address in DevOps and Agile, which is user-centric design.

It’s important to separate user experience (UX) and user interface (UI) design from user-centered/centric design (UCD). Shown below, brazenly borrowed from Minitab, is the general flow of a design cycle that incorporates UCD principles. Note that many of its steps map onto the missteps that appear throughout the story of the caucus app’s failure.

(source: https://blog.minitab.com/blog/what-is-user-centered-design-and-why-is-it-important)

But, as mentioned, software engineering should not occur in a vacuum. It requires participation by the customer, the end users, and the developers to ensure that what has been researched, defined, and ideated shows up in the prototype, which can be tested and then implemented through various iterations in the final deliverables. If you don’t do this, you will inevitably deliver something that does not meet the needs of the customer and user. As noted in the reported stories, the award for this development effort was granted in November 2019, and delivery was to occur mid-January: possibly 90 days, more likely 60 days, and given that three major holidays occur during this time, more like 45 calendar days, six weeks, and 30 actual weekdays. That is the rough reality of the development cycle for this application, from design to delivery in 30 days. Most sprints in Agile are on a two-week cycle, so this app was delivered after two sprints, and it is very doubtful this included a test cycle, design sessions or anything typically associated with UCD.

During those four weeks, the initial coding, templating, infrastructure integration, and testing needed to occur. Now, the wild card in all of this was the possibility of an off-the-shelf caucus app, which was merely a customized UI on top of a basic tabulation back end. But if that was the case, there’s a higher demand on customization and integration, let alone certification of the operation, so that is highly unlikely, or if it was the case, it was a definite poor selection as a way to deliver such a critical system. Part of the development, integration, deployment and operations process should also involve instrumenting your processes as a pipeline. By pipeline and process, I mean sampling and monitoring results from code check-ins, test results, and performance metrics, and evaluating and addressing failures, faults and performance issues. If that is not part of the system, then when it fails, as it did, it’s extremely difficult to identify the point of failure, address it in subsequent iterations of the application and service, and arrive at the root cause analysis probably now demanded by the customer.
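As a rough illustration of what instrumenting a pipeline can mean in practice, the sketch below wraps each stage in a small context manager that records its name, outcome, and duration. The `stage` helper, the stage names, and the simulated failure are assumptions for the example; a real pipeline would ship these events to a monitoring system instead of keeping them in a list.

```python
import time
from contextlib import contextmanager

events = []  # stand-in for a metrics or logging backend


@contextmanager
def stage(name):
    """Record the outcome and duration of one pipeline stage."""
    start = time.perf_counter()
    status = "ok"
    try:
        yield
    except Exception as exc:
        status = f"failed: {exc}"
        raise  # let the failure propagate after noting it
    finally:
        events.append({
            "stage": name,
            "status": status,
            "seconds": round(time.perf_counter() - start, 4),
        })


with stage("unit-tests"):
    pass  # placeholder for running the test suite

try:
    with stage("deploy"):
        raise RuntimeError("upload rejected")  # simulated failure
except RuntimeError:
    pass

for event in events:
    print(event)
```

With even this much in place, a failure leaves behind a record of which stage broke and when, which is the raw material a root cause analysis needs.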

While I have documented in this article a hypothesis of what probably happened based on reporting, it is merely a hypothesis based on the observable information coming from users, the press and other sources. How would I go about identifying that this was what happened, explaining what should have been done, and what best practices are? In short, “why should I listen to you?”

Well, I’ve been doing most of this, from various aspects of technology development, delivery, security and operations, for most of my career, which is nearly a quarter century in length. I’ve contributed to open source projects and run operating groups for IT and security in both small startups and Fortune 125 companies. I’ve worked at five Federal agencies and served as a cybersecurity advisor to the Federal Chief Information Officer at the White House. During that stint at the White House in the Office of Management and Budget, I reviewed and provided feedback on cybersecurity legislation from the US Congress, provided technical input on the response to the Heartbleed and Shellshock vulnerabilities, and was a co-founder of the US Digital Service, whose charter was to introduce and integrate concepts such as Agile and UCD into the Federal IT environment.

Prior to the role I currently have, I was a Deputy Chief Information Officer responsible for strategic planning, budget and operational efficiency for the US Department of Health and Human Services, Office of the Inspector General, and most recently the Chief Technology Officer for the same agency, delivering applications and services to our staff and the public using the same techniques outlined in this article. This doesn’t mean you have to believe what I wrote, but it comes from both a background supporting the public as a civil servant and an individual who lives these issues every day. I know we can do better because we’ve done better.